DeepSeek 是国内顶尖 AI 团队「深度求索」开发的多模态大模型,具备数学推理、代码生成等深度能力,堪称"AI界的六边形战士"。

DeepSeek 身上的标签有很多,其中最具代表性的标签有以下两个:

- 低成本(不挑硬件、开源)高性能(推理能力极强、回答准确)

终于不用翻墙,就能用到世界级水平的AI了!!!

DeepSeek太强了:

DeepSeek-R1 在后训练阶段大规模使用了强化学习技术,在仅有极少标注数据的情况下,极大提升了模型推理能力。在数学、代码、自然语言推理等任务上,性能比肩 OpenAI o1 正式版。

性能对齐海外领军闭源模型:DeepSeek-V3 为自研 MoE 模型,671B 参数,激活 37B,在 14.8T token 上进行了预训练。

论文链接:https://github.com/deepseek-ai/DeepSeek-V3/blob/main/DeepSeek_V3.pdf

DeepSeek-V3 多项评测成绩超越了 Qwen2.5-72B 和 Llama-3.1-405B 等其他开源模型,并在性能上和世界顶尖的闭源模型 GPT-4o 以及 Claude-3.5-Sonnet 不分伯仲。

DeepSeek太火了,作为一个研发,不自己部署一个是不是缺点意思?!

AI军备竞赛已经开始打响,作为普通人无法避开科技浪潮,如何不让自己随波逐流,或许我们需要躬身入局去体验,思考和探索。

话不多说,Just do it !

在本地部署 DeepSeek 只需要以下三步:

- 安装 Ollama部署 DeepSeek安装 open-webui【非必须,可选】

Ollama、DeepSeek 和 Open Web UI 三者之间的关系如下:

Ollama

- 定义:Ollama 是一个用于本地运行和管理大型语言模型(LLM)的开源工具,旨在简化在本地计算机上部署、运行和管理大型语言模型的流程。它支持多种操作系统(如 macOS、Linux 和 Windows),并提供了丰富的功能,使开发者、研究人员以及对数据隐私有较高要求的用户能够轻松地在本地环境中使用和定制大语言模型。Ollama 允许用户在本地运行多种开源大语言模型,如 LLaMA 2、DeepSeek、Mistral、Gemma 等,无需依赖云端服务,从而更好地保护数据隐私并降低延迟。功能:Ollama 提供了对模型的管理功能,包括模型的下载、运行、参数调整等。它还支持多种模型,包括 DeepSeek。

DeepSeek

- 定义:DeepSeek 是一个开源的大型语言模型,由 Moonshot AI 开发。它在 2025 年 1 月发布了 DeepSeek-R1 推理大模型。功能:DeepSeek 提供了强大的语言生成能力,可以用于多种自然语言处理任务。它可以通过 Ollama 进行本地部署和管理。

Open Web UI

- 定义:Open Web UI 是一个开源的 Web 界面工具,旨在提供一个用户友好的界面来与本地运行的 AI 模型进行交互。功能:Open Web UI 提供了可视化交互界面,支持多种 LLM 运行程序,包括 Ollama。它允许用户通过浏览器与 Ollama 管理的模型(如 DeepSeek)进行交互,并提供了丰富的功能,如 Markdown 和 LaTeX 支持。

三者的关系

- Ollama 是一个“大管家”,用于管理和运行 AI 模型的工具,就像一个大模型的治理工具,DeepSeek 是一个具体的 AI 模型,是一个“超级大脑”,可以回答我们各种问题,可以通过 Ollama 进行管理和运行。Open Web UI 是一个可视化界面工具,是一个“聊天工具”,用于与 Ollama 管理的模型(如 DeepSeek)进行交互,让我们更方便地和DeepSeek交流。它为用户提供了一个更友好的操作界面。类似的可视化界面工具还有ChatBox。

先看下效果:

环境:6c16G

DeepSeek版本介绍

模型参数规模典型用途CPU 建议GPU 建议内存建议 (RAM)磁盘空间建议适用场景1.5b (15亿)小型推理、轻量级任务4核以上 (Intel i5 / AMD Ryzen 5)可选,入门级 GPU (如 NVIDIA GTX 1650, 4GB 显存)8GB10GB 以上 SSD小型 NLP 任务、文本生成、简单分类7b (70亿)中等推理、通用任务6核以上 (Intel i7 / AMD Ryzen 7)中端 GPU (如 NVIDIA RTX 3060, 12GB 显存)16GB20GB 以上 SSD中等规模 NLP、对话系统、文本分析14b (140亿)中大型推理、复杂任务8核以上 (Intel i9 / AMD Ryzen 9)高端 GPU (如 NVIDIA RTX 3090, 24GB 显存)32GB50GB 以上 SSD复杂 NLP、多轮对话、知识问答32b (320亿)大型推理、高性能任务12核以上 (Intel Xeon / AMD Threadripper)高性能 GPU (如 NVIDIA A100, 40GB 显存)64GB100GB 以上 SSD大规模 NLP、多模态任务、研究用途70b (700亿)超大规模推理、研究任务16核以上 (服务器级 CPU)多 GPU 并行 (如 2x NVIDIA A100, 80GB 显存)128GB200GB 以上 SSD超大规模模型、研究、企业级应用671b (6710亿)超大规模训练、企业级任务服务器级 CPU (如 AMD EPYC / Intel Xeon)多 GPU 集群 (如 8x NVIDIA A100, 320GB 显存)256GB 或更高1TB 以上 NVMe SSD超大规模训练、企业级 AI 平台

根据电脑的配置的选择合适的DeepSeek版本。

本次部署1.5b的。1.5b,1.5Billion,15亿参数规模。

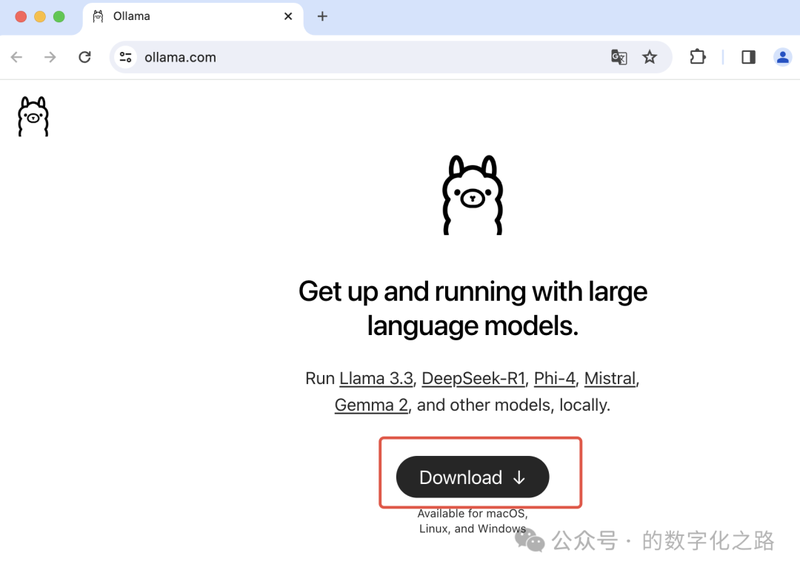

安装 Ollama

本次使用Homebrew安装:

brew install ollama% brew install ollama

==> Auto-updating Homebrew...

Adjust how often this is run with HOMEBREW_AUTO_UPDATE_SECS or disable with

HOMEBREW_NO_AUTO_UPDATE. Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

^C==> Downloading https://formulae.brew.sh/api/formula.jws.json

########################################################################################################################################## 100.0%

==> Downloading https://formulae.brew.sh/api/formula_tap_migrations.jws.json

########################################################################################################################################## 100.0%

==> Downloading https://formulae.brew.sh/api/cask.jws.json

########################################################################################################################################## 100.0%

==> Downloading https://formulae.brew.sh/api/cask_tap_migrations.jws.json

########################################################################################################################################## 100.0%

Warning: Treating ollama as a formula. For the cask, use homebrew/cask/ollama or specify the `--cask` flag. To silence this message, use the `--formula` flag.

Warning: You are using macOS 12.

We (and Apple) do not provide support for this old version.

It is expected behaviour that some formulae will fail to build in this old version.

It is expected behaviour that Homebrew will be buggy and slow.

Do not create any issues about this on Homebrew's GitHub repositories.

Do not create any issues even if you think this message is unrelated.

Any opened issues will be immediately closed without response.

Do not ask for help from Homebrew or its maintainers on social media.

You may ask for help in Homebrew's discussions but are unlikely to receive a response.

Try to figure out the problem yourself and submit a fix as a pull request.

We will review it but may or may not accept it.

==> Fetching dependencies for ollama: cmake and go

==> Fetching cmake

==> Downloading https://raw.githubusercontent.com/Homebrew/homebrew-core/1dcb0baf4a6816ea98006489556cb4dae04b8453/Formula/c/cmake.rb

########################################################################################################################################## 100.0%

==> Downloading https://github.com/Kitware/CMake/releases/download/v3.31.5/cmake-3.31.5.tar.gz

==> Downloading from https://objects.githubusercontent.com/github-production-release-asset-2e65be/537699/9ec949a1-2626-4bc3-a60a-e7655dc83b43?X-A

########################################################################################################################################## 100.0%

==> Fetching go

==> Downloading https://raw.githubusercontent.com/Homebrew/homebrew-core/1dcb0baf4a6816ea98006489556cb4dae04b8453/Formula/g/go.rb

########################################################################################################################################## 100.0%

==> Downloading https://storage.googleapis.com/golang/go1.20.14.darwin-amd64.tar.gz

########################################################################################################################################## 100.0%

==> Downloading https://go.dev/dl/go1.23.6.src.tar.gz

==> Downloading from https://dl.google.com/go/go1.23.6.src.tar.gz

########################################################################################################################################## 100.0%

==> Fetching ollama

==> Downloading https://raw.githubusercontent.com/Homebrew/homebrew-core/1dcb0baf4a6816ea98006489556cb4dae04b8453/Formula/o/ollama.rb

########################################################################################################################################## 100.0%

==> Cloning https://github.com/ollama/ollama.git

Cloning into '/Users/cheng.tang/Library/Caches/Homebrew/ollama--git'...

==> Checking out tag v0.5.7

HEAD is now at a420a453 fix default modelfile for create (#8452)

==> Installing dependencies for ollama: cmake and go

==> Installing ollama dependency: cmake

==> Patching

==> ./bootstrap --prefix=/usr/local/Cellar/cmake/3.31.5 --no-system-libs --parallel=12 --datadir=/share/cmake --docdir=/share/doc/cmake --mandir=

==> make

==> make install

==> Downloading https://formulae.brew.sh/api/formula.jws.json

########################################################################################################################################## 100.0%

🍺 /usr/local/Cellar/cmake/3.31.5: 3,767 files, 62.7MB, built in 12 minutes 42 seconds

==> Installing ollama dependency: go

==> ./make.bash

==> /usr/local/Cellar/go/1.23.6/bin/go install std cmd

🍺 /usr/local/Cellar/go/1.23.6: 13,239 files, 272.3MB, built in 3 minutes 57 seconds

==> Installing ollama

==> go generate ./...

==> go build -ldflags=-s -w -X=github.com/ollama/ollama/version.Version=0.5.7 -X=github.com/ollama/ollama/server.mode=release

==> Caveats

To start ollama now and restart at login:

brew services start ollama

Or, if you don't want/need a background service you can just run:

/usr/local/opt/ollama/bin/ollama serve

==> Summary

🍺 /usr/local/Cellar/ollama/0.5.7: 8 files, 25.3MB, built in 2 minutes 35 seconds

==> Running `brew cleanup ollama`...

Disable this behaviour by setting HOMEBREW_NO_INSTALL_CLEANUP.

Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

==> `brew cleanup` has not been run in the last 30 days, running now...

Disable this behaviour by setting HOMEBREW_NO_INSTALL_CLEANUP.

Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).

Removing: /Users/cheng.tang/Library/Caches/Homebrew/ca-certificates--2024-11-26.all.bottle.tar.gz... (133.8KB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/go--gobootstrap--1.20.14.tar.gz... (95.5MB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/sqlite--3.47.1.tar.gz... (3.2MB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/xz--5.6.3.tar.gz... (2.2MB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/go_mod_cache... (11,864 files, 281MB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/go_cache... (7,337 files, 865.9MB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/Cask/docker--4.36.0,175267.dmg... (509MB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/Cask/wireshark--4.4.2.dmg... (66.0MB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/api-source/Homebrew/homebrew-core/ba88823a4b85f26db08da252d4350d197cc27f9f/Formula/[email protected]... (7.1KB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/api-source/Homebrew/homebrew-core/ba88823a4b85f26db08da252d4350d197cc27f9f/Formula/pkgconf.rb... (3.3KB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/api-source/Homebrew/homebrew-core/ba88823a4b85f26db08da252d4350d197cc27f9f/Formula/[email protected]... (21.8KB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/api-source/Homebrew/homebrew-core/ba88823a4b85f26db08da252d4350d197cc27f9f/Formula/sqlite.rb... (2.8KB)

Removing: /Users/cheng.tang/Library/Caches/Homebrew/api-source/Homebrew/homebrew-core/ba88823a4b85f26db08da252d4350d197cc27f9f/Formula/xz.rb... (2.3KB)

Removing: /Users/cheng.tang/Library/Logs/Homebrew/[email protected]... (17 files, 5.2MB)

Removing: /Users/cheng.tang/Library/Logs/Homebrew/sqlite... (6 files, 216KB)

Removing: /Users/cheng.tang/Library/Logs/Homebrew/xz... (7 files, 1.3MB)

Removing: /Users/cheng.tang/Library/Logs/Homebrew/pkgconf... (7 files, 206.5KB)

Removing: /Users/cheng.tang/Library/Logs/Homebrew/openssl@3... (7 files, 7.9MB)

Removing: /Users/cheng.tang/Library/Logs/Homebrew/ca-certificates... (64B)

==> Caveats

==> ollama

To start ollama now and restart at login:

brew services start ollama

Or, if you don't want/need a background service you can just run:

/usr/local/opt/ollama/bin/ollama serve还有其它安装可选:

https://ollama.com/

部署 DeepSeek

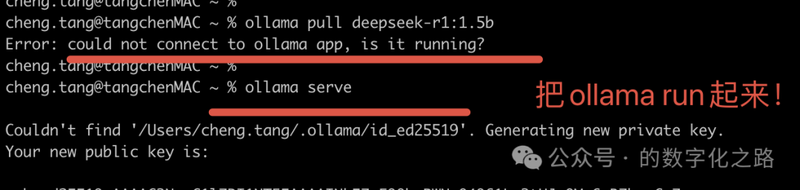

step2.1:新打开一个窗口,执行命令

ollama serve% ollama serve

Couldn't find '/Users/cheng.tang/.ollama/id_ed25519'. Generating new private key.

Your new public key is:

ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAINk5ZvF90hyPWNuQ4961Ln3tUJyOMv6nBZhygGoZaxex

2025/02/08 19:16:52 routes.go:1187: INFO server config env="map[HTTPS_PROXY: HTTP_PROXY: NO_PROXY: OLLAMA_DEBUG:false OLLAMA_FLASH_ATTENTION:false OLLAMA_GPU_OVERHEAD:0 OLLAMA_HOST:http://127.0.0.1:11434 OLLAMA_KEEP_ALIVE:5m0s OLLAMA_KV_CACHE_TYPE: OLLAMA_LLM_LIBRARY: OLLAMA_LOAD_TIMEOUT:5m0s OLLAMA_MAX_LOADED_MODELS:0 OLLAMA_MAX_QUEUE:512 OLLAMA_MODELS:/Users/cheng.tang/.ollama/models OLLAMA_MULTIUSER_CACHE:false OLLAMA_NOHISTORY:false OLLAMA_NOPRUNE:false OLLAMA_NUM_PARALLEL:0 OLLAMA_ORIGINS:[http://localhost https://localhost http://localhost:* https://localhost:* http://127.0.0.1 https://127.0.0.1 http://127.0.0.1:* https://127.0.0.1:* http://0.0.0.0 https://0.0.0.0 http://0.0.0.0:* https://0.0.0.0:* app://* file://* tauri://* vscode-webview://*] OLLAMA_SCHED_SPREAD:false http_proxy: https_proxy: no_proxy:]"

time=2025-02-08T19:16:52.378+08:00 level=INFO source=images.go:432 msg="total blobs: 0"

time=2025-02-08T19:16:52.378+08:00 level=INFO source=images.go:439 msg="total unused blobs removed: 0"

time=2025-02-08T19:16:52.381+08:00 level=INFO source=routes.go:1238 msg="Listening on 127.0.0.1:11434 (version 0.5.7)"

time=2025-02-08T19:16:52.382+08:00 level=INFO source=routes.go:1267 msg="Dynamic LLM libraries" runners=[cpu]

time=2025-02-08T19:16:52.383+08:00 level=INFO source=types.go:131 msg="inference compute" id="" library=cpu variant=avx2 compute="" driver=0.0 name="" total="16.0 GiB" available="4.8 GiB"

[GIN] 2025/02/08 - 19:17:13 | 200 | 2.442449ms | 127.0.0.1 | HEAD "/"

time=2025-02-08T19:17:15.376+08:00 level=INFO source=download.go:175 msg="downloading aabd4debf0c8 in 12 100 MB part(s)"

time=2025-02-08T19:23:16.349+08:00 level=INFO source=download.go:175 msg="downloading 369ca498f347 in 1 387 B part(s)"

time=2025-02-08T19:23:17.923+08:00 level=INFO source=download.go:175 msg="downloading 6e4c38e1172f in 1 1.1 KB part(s)"

time=2025-02-08T19:23:19.652+08:00 level=INFO source=download.go:175 msg="downloading f4d24e9138dd in 1 148 B part(s)"

time=2025-02-08T19:23:21.174+08:00 level=INFO source=download.go:175 msg="downloading a85fe2a2e58e in 1 487 B part(s)"

[GIN] 2025/02/08 - 19:23:26 | 200 | 6m12s | 127.0.0.1 | POST "/api/pull"

[GIN] 2025/02/08 - 19:23:45 | 200 | 29.715µs | 127.0.0.1 | HEAD "/"

[GIN] 2025/02/08 - 19:23:45 | 200 | 32.797546ms | 127.0.0.1 | POST "/api/show"

time=2025-02-08T19:23:45.403+08:00 level=INFO source=server.go:104 msg="system memory" total="16.0 GiB" free="3.3 GiB" free_swap="0 B"

time=2025-02-08T19:23:45.406+08:00 level=INFO source=memory.go:356 msg="offload to cpu" layers.requested=-1 layers.model=29 layers.offload=0 layers.split="" memory.available="[3.3 GiB]" memory.gpu_overhead="0 B" memory.required.full="1.5 GiB" memory.required.partial="0 B" memory.required.kv="224.0 MiB" memory.required.allocations="[1.5 GiB]" memory.weights.total="976.1 MiB" memory.weights.repeating="793.5 MiB" memory.weights.nonrepeating="182.6 MiB" memory.graph.full="299.8 MiB" memory.graph.partial="482.3 MiB"

time=2025-02-08T19:23:45.416+08:00 level=INFO source=server.go:376 msg="starting llama server" cmd="/usr/local/bin/ollama runner --model /Users/cheng.tang/.ollama/models/blobs/sha256-aabd4debf0c8f08881923f2c25fc0fdeed24435271c2b3e92c4af36704040dbc --ctx-size 8192 --batch-size 512 --threads 6 --no-mmap --parallel 4 --port 56752"

time=2025-02-08T19:23:45.426+08:00 level=INFO source=sched.go:449 msg="loaded runners" count=1

time=2025-02-08T19:23:45.426+08:00 level=INFO source=server.go:555 msg="waiting for llama runner to start responding"

time=2025-02-08T19:23:45.427+08:00 level=INFO source=server.go:589 msg="waiting for server to become available" status="llm server error"

time=2025-02-08T19:23:45.453+08:00 level=INFO source=runner.go:936 msg="starting go runner"

time=2025-02-08T19:23:45.454+08:00 level=INFO source=runner.go:937 msg=system info="CPU : SSE3 = 1 | SSSE3 = 1 | LLAMAFILE = 1 | AARCH64_REPACK = 1 | CPU : SSE3 = 1 | SSSE3 = 1 | LLAMAFILE = 1 | AARCH64_REPACK = 1 | cgo(clang)" threads=6

time=2025-02-08T19:23:45.455+08:00 level=INFO source=runner.go:995 msg="Server listening on 127.0.0.1:56752"

llama_model_loader: loaded meta data with 26 key-value pairs and 339 tensors from /Users/cheng.tang/.ollama/models/blobs/sha256-aabd4debf0c8f08881923f2c25fc0fdeed24435271c2b3e92c4af36704040dbc (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen2

llama_model_loader: - kv 1: general.type str = model

llama_model_loader: - kv 2: general.name str = DeepSeek R1 Distill Qwen 1.5B

llama_model_loader: - kv 3: general.basename str = DeepSeek-R1-Distill-Qwen

llama_model_loader: - kv 4: general.size_label str = 1.5B

llama_model_loader: - kv 5: qwen2.block_count u32 = 28

llama_model_loader: - kv 6: qwen2.context_length u32 = 131072

llama_model_loader: - kv 7: qwen2.embedding_length u32 = 1536

llama_model_loader: - kv 8: qwen2.feed_forward_length u32 = 8960

llama_model_loader: - kv 9: qwen2.attention.head_count u32 = 12

llama_model_loader: - kv 10: qwen2.attention.head_count_kv u32 = 2

llama_model_loader: - kv 11: qwen2.rope.freq_base f32 = 10000.000000

llama_model_loader: - kv 12: qwen2.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 13: general.file_type u32 = 15

llama_model_loader: - kv 14: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 15: tokenizer.ggml.pre str = qwen2

llama_model_loader: - kv 16: tokenizer.ggml.tokens arr[str,151936] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 17: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 18: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 19: tokenizer.ggml.bos_token_id u32 = 151646

llama_model_loader: - kv 20: tokenizer.ggml.eos_token_id u32 = 151643

llama_model_loader: - kv 21: tokenizer.ggml.padding_token_id u32 = 151643

llama_model_loader: - kv 22: tokenizer.ggml.add_bos_token bool = true

llama_model_loader: - kv 23: tokenizer.ggml.add_eos_token bool = false

llama_model_loader: - kv 24: tokenizer.chat_template str = {% if not add_generation_prompt is de...

llama_model_loader: - kv 25: general.quantization_version u32 = 2

llama_model_loader: - type f32: 141 tensors

llama_model_loader: - type q4_K: 169 tensors

llama_model_loader: - type q6_K: 29 tensors

time=2025-02-08T19:23:45.680+08:00 level=INFO source=server.go:589 msg="waiting for server to become available" status="llm server loading model"

llm_load_vocab: special_eos_id is not in special_eog_ids - the tokenizer config may be incorrect

llm_load_vocab: special tokens cache size = 22

llm_load_vocab: token to piece cache size = 0.9310 MB

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen2

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 151936

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: vocab_only = 0

llm_load_print_meta: n_ctx_train = 131072

llm_load_print_meta: n_embd = 1536

llm_load_print_meta: n_layer = 28

llm_load_print_meta: n_head = 12

llm_load_print_meta: n_head_kv = 2

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_swa = 0

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 6

llm_load_print_meta: n_embd_k_gqa = 256

llm_load_print_meta: n_embd_v_gqa = 256

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: f_logit_scale = 0.0e+00

llm_load_print_meta: n_ff = 8960

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: causal attn = 1

llm_load_print_meta: pooling type = 0

llm_load_print_meta: rope type = 2

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_ctx_orig_yarn = 131072

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: ssm_d_conv = 0

llm_load_print_meta: ssm_d_inner = 0

llm_load_print_meta: ssm_d_state = 0

llm_load_print_meta: ssm_dt_rank = 0

llm_load_print_meta: ssm_dt_b_c_rms = 0

llm_load_print_meta: model type = 1.5B

llm_load_print_meta: model ftype = Q4_K - Medium

llm_load_print_meta: model params = 1.78 B

llm_load_print_meta: model size = 1.04 GiB (5.00 BPW)

llm_load_print_meta: general.name = DeepSeek R1 Distill Qwen 1.5B

llm_load_print_meta: BOS token = 151646 '<|begin▁of▁sentence|>'

llm_load_print_meta: EOS token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: EOT token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: PAD token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_print_meta: FIM PRE token = 151659 '<|fim_prefix|>'

llm_load_print_meta: FIM SUF token = 151661 '<|fim_suffix|>'

llm_load_print_meta: FIM MID token = 151660 '<|fim_middle|>'

llm_load_print_meta: FIM PAD token = 151662 '<|fim_pad|>'

llm_load_print_meta: FIM REP token = 151663 '<|repo_name|>'

llm_load_print_meta: FIM SEP token = 151664 '<|file_sep|>'

llm_load_print_meta: EOG token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: EOG token = 151662 '<|fim_pad|>'

llm_load_print_meta: EOG token = 151663 '<|repo_name|>'

llm_load_print_meta: EOG token = 151664 '<|file_sep|>'

llm_load_print_meta: max token length = 256

llm_load_tensors: CPU model buffer size = 1059.89 MiB

llama_new_context_with_model: n_seq_max = 4

llama_new_context_with_model: n_ctx = 8192

llama_new_context_with_model: n_ctx_per_seq = 2048

llama_new_context_with_model: n_batch = 2048

llama_new_context_with_model: n_ubatch = 512

llama_new_context_with_model: flash_attn = 0

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_new_context_with_model: n_ctx_per_seq (2048) < n_ctx_train (131072) -- the full capacity of the model will not be utilized

llama_kv_cache_init: kv_size = 8192, offload = 1, type_k = 'f16', type_v = 'f16', n_layer = 28, can_shift = 1

llama_kv_cache_init: CPU KV buffer size = 224.00 MiB

llama_new_context_with_model: KV self size = 224.00 MiB, K (f16): 112.00 MiB, V (f16): 112.00 MiB

llama_new_context_with_model: CPU output buffer size = 2.34 MiB

llama_new_context_with_model: CPU compute buffer size = 302.75 MiB

llama_new_context_with_model: graph nodes = 986

llama_new_context_with_model: graph splits = 1

time=2025-02-08T19:23:47.939+08:00 level=INFO source=server.go:594 msg="llama runner started in 2.51 seconds"

[GIN] 2025/02/08 - 19:23:47 | 200 | 2.606496945s | 127.0.0.1 | POST "/api/generate"

[GIN] 2025/02/08 - 19:25:23 | 200 | 31.331µs | 127.0.0.1 | HEAD "/"

[GIN] 2025/02/08 - 19:25:23 | 200 | 35.849465ms | 127.0.0.1 | POST "/api/show"

[GIN] 2025/02/08 - 19:25:23 | 200 | 30.903373ms | 127.0.0.1 | POST "/api/generate"

[GIN] 2025/02/08 - 19:27:26 | 200 | 3m6s | 127.0.0.1 | POST "/api/chat"

[GIN] 2025/02/08 - 19:29:04 | 200 | 3m6s | 127.0.0.1 | POST "/api/chat"

time=2025-02-09T20:45:02.540+08:00 level=INFO source=server.go:104 msg="system memory" total="16.0 GiB" free="6.6 GiB" free_swap="0 B"

time=2025-02-09T20:45:02.543+08:00 level=INFO source=memory.go:356 msg="offload to cpu" layers.requested=-1 layers.model=29 layers.offload=0 layers.split="" memory.available="[6.6 GiB]" memory.gpu_overhead="0 B" memory.required.full="1.5 GiB" memory.required.partial="0 B" memory.required.kv="224.0 MiB" memory.required.allocations="[1.5 GiB]" memory.weights.total="976.1 MiB" memory.weights.repeating="793.5 MiB" memory.weights.nonrepeating="182.6 MiB" memory.graph.full="299.8 MiB" memory.graph.partial="482.3 MiB"

time=2025-02-09T20:45:02.562+08:00 level=INFO source=server.go:376 msg="starting llama server" cmd="/usr/local/bin/ollama runner --model /Users/cheng.tang/.ollama/models/blobs/sha256-aabd4debf0c8f08881923f2c25fc0fdeed24435271c2b3e92c4af36704040dbc --ctx-size 8192 --batch-size 512 --threads 6 --no-mmap --parallel 4 --port 50927"

time=2025-02-09T20:45:02.568+08:00 level=INFO source=sched.go:449 msg="loaded runners" count=1

time=2025-02-09T20:45:02.570+08:00 level=INFO source=server.go:555 msg="waiting for llama runner to start responding"

time=2025-02-09T20:45:02.572+08:00 level=INFO source=server.go:589 msg="waiting for server to become available" status="llm server error"

time=2025-02-09T20:45:02.598+08:00 level=INFO source=runner.go:936 msg="starting go runner"

time=2025-02-09T20:45:02.601+08:00 level=INFO source=runner.go:937 msg=system info="CPU : SSE3 = 1 | SSSE3 = 1 | LLAMAFILE = 1 | AARCH64_REPACK = 1 | CPU : SSE3 = 1 | SSSE3 = 1 | LLAMAFILE = 1 | AARCH64_REPACK = 1 | cgo(clang)" threads=6

time=2025-02-09T20:45:02.606+08:00 level=INFO source=runner.go:995 msg="Server listening on 127.0.0.1:50927"

llama_model_loader: loaded meta data with 26 key-value pairs and 339 tensors from /Users/cheng.tang/.ollama/models/blobs/sha256-aabd4debf0c8f08881923f2c25fc0fdeed24435271c2b3e92c4af36704040dbc (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen2

llama_model_loader: - kv 1: general.type str = model

llama_model_loader: - kv 2: general.name str = DeepSeek R1 Distill Qwen 1.5B

llama_model_loader: - kv 3: general.basename str = DeepSeek-R1-Distill-Qwen

llama_model_loader: - kv 4: general.size_label str = 1.5B

llama_model_loader: - kv 5: qwen2.block_count u32 = 28

llama_model_loader: - kv 6: qwen2.context_length u32 = 131072

llama_model_loader: - kv 7: qwen2.embedding_length u32 = 1536

llama_model_loader: - kv 8: qwen2.feed_forward_length u32 = 8960

llama_model_loader: - kv 9: qwen2.attention.head_count u32 = 12

llama_model_loader: - kv 10: qwen2.attention.head_count_kv u32 = 2

llama_model_loader: - kv 11: qwen2.rope.freq_base f32 = 10000.000000

llama_model_loader: - kv 12: qwen2.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 13: general.file_type u32 = 15

llama_model_loader: - kv 14: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 15: tokenizer.ggml.pre str = qwen2

llama_model_loader: - kv 16: tokenizer.ggml.tokens arr[str,151936] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 17: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 18: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 19: tokenizer.ggml.bos_token_id u32 = 151646

llama_model_loader: - kv 20: tokenizer.ggml.eos_token_id u32 = 151643

llama_model_loader: - kv 21: tokenizer.ggml.padding_token_id u32 = 151643

llama_model_loader: - kv 22: tokenizer.ggml.add_bos_token bool = true

llama_model_loader: - kv 23: tokenizer.ggml.add_eos_token bool = false

llama_model_loader: - kv 24: tokenizer.chat_template str = {% if not add_generation_prompt is de...

llama_model_loader: - kv 25: general.quantization_version u32 = 2

llama_model_loader: - type f32: 141 tensors

llama_model_loader: - type q4_K: 169 tensors

llama_model_loader: - type q6_K: 29 tensors

time=2025-02-09T20:45:02.833+08:00 level=INFO source=server.go:589 msg="waiting for server to become available" status="llm server loading model"

llm_load_vocab: special_eos_id is not in special_eog_ids - the tokenizer config may be incorrect

llm_load_vocab: special tokens cache size = 22

llm_load_vocab: token to piece cache size = 0.9310 MB

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen2

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 151936

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: vocab_only = 0

llm_load_print_meta: n_ctx_train = 131072

llm_load_print_meta: n_embd = 1536

llm_load_print_meta: n_layer = 28

llm_load_print_meta: n_head = 12

llm_load_print_meta: n_head_kv = 2

llm_load_print_meta: n_rot = 128

llm_load_print_meta: n_swa = 0

llm_load_print_meta: n_embd_head_k = 128

llm_load_print_meta: n_embd_head_v = 128

llm_load_print_meta: n_gqa = 6

llm_load_print_meta: n_embd_k_gqa = 256

llm_load_print_meta: n_embd_v_gqa = 256

llm_load_print_meta: f_norm_eps = 0.0e+00

llm_load_print_meta: f_norm_rms_eps = 1.0e-06

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: f_logit_scale = 0.0e+00

llm_load_print_meta: n_ff = 8960

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: causal attn = 1

llm_load_print_meta: pooling type = 0

llm_load_print_meta: rope type = 2

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_ctx_orig_yarn = 131072

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: ssm_d_conv = 0

llm_load_print_meta: ssm_d_inner = 0

llm_load_print_meta: ssm_d_state = 0

llm_load_print_meta: ssm_dt_rank = 0

llm_load_print_meta: ssm_dt_b_c_rms = 0

llm_load_print_meta: model type = 1.5B

llm_load_print_meta: model ftype = Q4_K - Medium

llm_load_print_meta: model params = 1.78 B

llm_load_print_meta: model size = 1.04 GiB (5.00 BPW)

llm_load_print_meta: general.name = DeepSeek R1 Distill Qwen 1.5B

llm_load_print_meta: BOS token = 151646 '<|begin▁of▁sentence|>'

llm_load_print_meta: EOS token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: EOT token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: PAD token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_print_meta: FIM PRE token = 151659 '<|fim_prefix|>'

llm_load_print_meta: FIM SUF token = 151661 '<|fim_suffix|>'

llm_load_print_meta: FIM MID token = 151660 '<|fim_middle|>'

llm_load_print_meta: FIM PAD token = 151662 '<|fim_pad|>'

llm_load_print_meta: FIM REP token = 151663 '<|repo_name|>'

llm_load_print_meta: FIM SEP token = 151664 '<|file_sep|>'

llm_load_print_meta: EOG token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: EOG token = 151662 '<|fim_pad|>'

llm_load_print_meta: EOG token = 151663 '<|repo_name|>'

llm_load_print_meta: EOG token = 151664 '<|file_sep|>'

llm_load_print_meta: max token length = 256

llm_load_tensors: CPU model buffer size = 1059.89 MiB

llama_new_context_with_model: n_seq_max = 4

llama_new_context_with_model: n_ctx = 8192

llama_new_context_with_model: n_ctx_per_seq = 2048

llama_new_context_with_model: n_batch = 2048

llama_new_context_with_model: n_ubatch = 512

llama_new_context_with_model: flash_attn = 0

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_new_context_with_model: n_ctx_per_seq (2048) < n_ctx_train (131072) -- the full capacity of the model will not be utilized

llama_kv_cache_init: kv_size = 8192, offload = 1, type_k = 'f16', type_v = 'f16', n_layer = 28, can_shift = 1

llama_kv_cache_init: CPU KV buffer size = 224.00 MiB

llama_new_context_with_model: KV self size = 224.00 MiB, K (f16): 112.00 MiB, V (f16): 112.00 MiB

llama_new_context_with_model: CPU output buffer size = 2.34 MiB

llama_new_context_with_model: CPU compute buffer size = 302.75 MiB

llama_new_context_with_model: graph nodes = 986

llama_new_context_with_model: graph splits = 1

time=2025-02-09T20:45:05.097+08:00 level=INFO source=server.go:594 msg="llama runner started in 2.53 seconds"

llama_model_loader: loaded meta data with 26 key-value pairs and 339 tensors from /Users/cheng.tang/.ollama/models/blobs/sha256-aabd4debf0c8f08881923f2c25fc0fdeed24435271c2b3e92c4af36704040dbc (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = qwen2

llama_model_loader: - kv 1: general.type str = model

llama_model_loader: - kv 2: general.name str = DeepSeek R1 Distill Qwen 1.5B

llama_model_loader: - kv 3: general.basename str = DeepSeek-R1-Distill-Qwen

llama_model_loader: - kv 4: general.size_label str = 1.5B

llama_model_loader: - kv 5: qwen2.block_count u32 = 28

llama_model_loader: - kv 6: qwen2.context_length u32 = 131072

llama_model_loader: - kv 7: qwen2.embedding_length u32 = 1536

llama_model_loader: - kv 8: qwen2.feed_forward_length u32 = 8960

llama_model_loader: - kv 9: qwen2.attention.head_count u32 = 12

llama_model_loader: - kv 10: qwen2.attention.head_count_kv u32 = 2

llama_model_loader: - kv 11: qwen2.rope.freq_base f32 = 10000.000000

llama_model_loader: - kv 12: qwen2.attention.layer_norm_rms_epsilon f32 = 0.000001

llama_model_loader: - kv 13: general.file_type u32 = 15

llama_model_loader: - kv 14: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 15: tokenizer.ggml.pre str = qwen2

llama_model_loader: - kv 16: tokenizer.ggml.tokens arr[str,151936] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 17: tokenizer.ggml.token_type arr[i32,151936] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 18: tokenizer.ggml.merges arr[str,151387] = ["Ġ Ġ", "ĠĠ ĠĠ", "i n", "Ġ t",...

llama_model_loader: - kv 19: tokenizer.ggml.bos_token_id u32 = 151646

llama_model_loader: - kv 20: tokenizer.ggml.eos_token_id u32 = 151643

llama_model_loader: - kv 21: tokenizer.ggml.padding_token_id u32 = 151643

llama_model_loader: - kv 22: tokenizer.ggml.add_bos_token bool = true

llama_model_loader: - kv 23: tokenizer.ggml.add_eos_token bool = false

llama_model_loader: - kv 24: tokenizer.chat_template str = {% if not add_generation_prompt is de...

llama_model_loader: - kv 25: general.quantization_version u32 = 2

llama_model_loader: - type f32: 141 tensors

llama_model_loader: - type q4_K: 169 tensors

llama_model_loader: - type q6_K: 29 tensors

llm_load_vocab: special_eos_id is not in special_eog_ids - the tokenizer config may be incorrect

llm_load_vocab: special tokens cache size = 22

llm_load_vocab: token to piece cache size = 0.9310 MB

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = qwen2

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 151936

llm_load_print_meta: n_merges = 151387

llm_load_print_meta: vocab_only = 1

llm_load_print_meta: model type = ?B

llm_load_print_meta: model ftype = all F32

llm_load_print_meta: model params = 1.78 B

llm_load_print_meta: model size = 1.04 GiB (5.00 BPW)

llm_load_print_meta: general.name = DeepSeek R1 Distill Qwen 1.5B

llm_load_print_meta: BOS token = 151646 '<|begin▁of▁sentence|>'

llm_load_print_meta: EOS token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: EOT token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: PAD token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: LF token = 148848 'ÄĬ'

llm_load_print_meta: FIM PRE token = 151659 '<|fim_prefix|>'

llm_load_print_meta: FIM SUF token = 151661 '<|fim_suffix|>'

llm_load_print_meta: FIM MID token = 151660 '<|fim_middle|>'

llm_load_print_meta: FIM PAD token = 151662 '<|fim_pad|>'

llm_load_print_meta: FIM REP token = 151663 '<|repo_name|>'

llm_load_print_meta: FIM SEP token = 151664 '<|file_sep|>'

llm_load_print_meta: EOG token = 151643 '<|end▁of▁sentence|>'

llm_load_print_meta: EOG token = 151662 '<|fim_pad|>'

llm_load_print_meta: EOG token = 151663 '<|repo_name|>'

llm_load_print_meta: EOG token = 151664 '<|file_sep|>'

llm_load_print_meta: max token length = 256

llama_model_load: vocab only - skipping tensors

[GIN] 2025/02/09 - 20:45:36 | 200 | 34.29045016s | 127.0.0.1 | POST "/api/chat"

[GIN] 2025/02/09 - 20:45:52 | 200 | 3.027628ms | 127.0.0.1 | HEAD "/"

[GIN] 2025/02/09 - 20:45:52 | 200 | 36.258285ms | 127.0.0.1 | POST "/api/show"

[GIN] 2025/02/09 - 20:45:52 | 200 | 35.208798ms | 127.0.0.1 | POST "/api/generate"

[GIN] 2025/02/09 - 20:47:47 | 200 | 1m17s | 127.0.0.1 | POST "/api/chat"

[GIN] 2025/02/09 - 20:52:31 | 200 | 2m38s | 127.0.0.1 | POST "/api/chat"

[GIN] 2025/02/09 - 20:53:59 | 200 | 970.529µs | 127.0.0.1 | HEAD "/"

[GIN] 2025/02/09 - 20:53:59 | 200 | 28.457032ms | 127.0.0.1 | POST "/api/show"

[GIN] 2025/02/09 - 20:53:59 | 200 | 26.916128ms | 127.0.0.1 | POST "/api/generate"

[GIN] 2025/02/09 - 20:54:47 | 200 | 45.444670446s | 127.0.0.1 | POST "/api/chat"

[GIN] 2025/02/09 - 20:57:28 | 200 | 22.175µs | 127.0.0.1 | HEAD "/"

[GIN] 2025/02/09 - 20:57:28 | 200 | 22.383853ms | 127.0.0.1 | POST "/api/show"

[GIN] 2025/02/09 - 20:57:28 | 200 | 25.419843ms | 127.0.0.1 | POST "/api/generate"

[GIN] 2025/02/09 - 20:59:00 | 200 | 37.537743059s | 127.0.0.1 | POST "/api/chat"Tips:使用brew services start ollama也可以,只是后面关闭时有些费事。本次未使用此命令。

brew services start ollama使用ollama serve命令,只需要把命令执行的窗口关闭,ollama就关掉了。

step2.2:下载ollama模型:

ollama pull deepseek-r1:1.5b % ollama pull deepseek-r1:1.5b

pulling manifest

pulling aabd4debf0c8... 100% ▕█████████████████████████████████████████████████████████████████████████████████▏ 1.1 GB

pulling 369ca498f347... 100% ▕█████████████████████████████████████████████████████████████████████████████████▏ 387 B

pulling 6e4c38e1172f... 100% ▕█████████████████████████████████████████████████████████████████████████████████▏ 1.1 KB

pulling f4d24e9138dd... 100% ▕█████████████████████████████████████████████████████████████████████████████████▏ 148 B

pulling a85fe2a2e58e... 100% ▕█████████████████████████████████████████████████████████████████████████████████▏ 487 B

verifying sha256 digest

writing manifest

success看到success即代表模型部署成功。

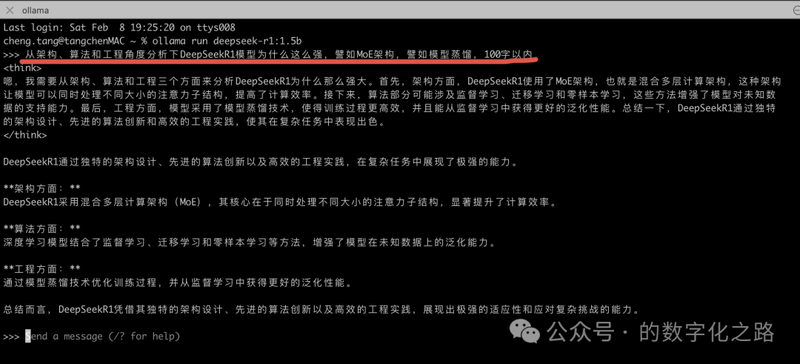

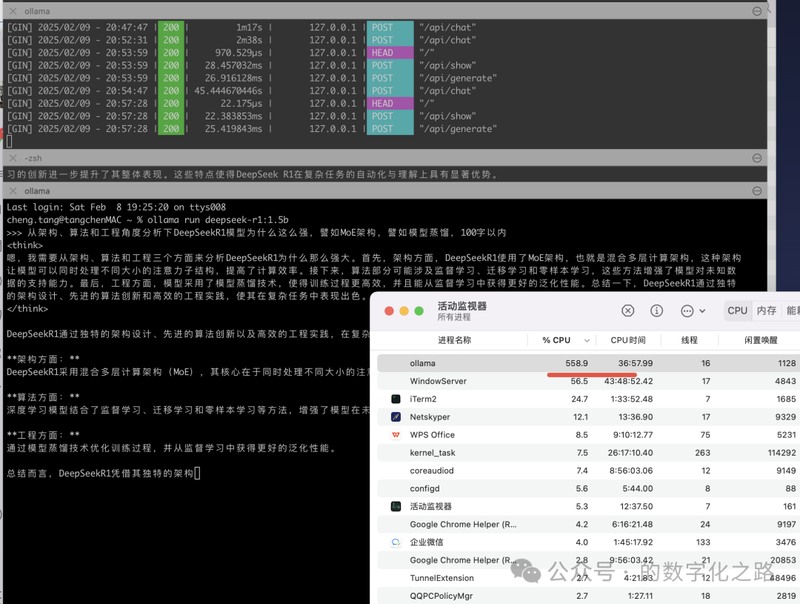

step2.3:运行deepseek

ollama run deepseek-r1:1.5b% ollama run deepseek-r1:1.5b

>>> 从架构、算法和工程角度分析下DeepSeekR1模型为什么这么强,譬如MoE架构,譬如模型蒸馏,100字以内

<think>

嗯,我需要从架构、算法和工程三个方面来分析DeepSeekR1为什么那么强大。首先,架构方面,DeepSeekR1使用了MoE架构,也就是混合多层计算架构,这种架构

让模型可以同时处理不同大小的注意力子结构,提高了计算效率。接下来,算法部分可能涉及监督学习、迁移学习和零样本学习,这些方法增强了模型对未知数

据的支持能力。最后,工程方面,模型采用了模型蒸馏技术,使得训练过程更高效,并且能从监督学习中获得更好的泛化性能。总结一下,DeepSeekR1通过独特

的架构设计、先进的算法创新和高效的工程实践,使其在复杂任务中表现出色。

</think>

DeepSeekR1通过独特的架构设计、先进的算法创新以及高效的工程实践,在复杂任务中展现了极强的能力。

**架构方面:**

DeepSeekR1采用混合多层计算架构(MoE),其核心在于同时处理不同大小的注意力子结构,显著提升了计算效率。

**算法方面:**

深度学习模型结合了监督学习、迁移学习和零样本学习等方法,增强了模型在未知数据上的泛化能力。

**工程方面:**

通过模型蒸馏技术优化训练过程,并从监督学习中获得更好的泛化性能。

总结而言,DeepSeekR1凭借其独特的架构设计、先进的算法创新以及高效的工程实践,展现出极强的适应性和应对复杂挑战的能力。

>>> Send a message (/? for help)

到此,deepseek-r1已经本地化部署成功!

是不是觉得很简单

是的,只要按照步骤来,小白也能轻松搞定。动手做起来吧,说不定你就能发现更多好玩的功能,让这个大模型成为你工作和学习的得力助手呢!要是你在部署过程中遇到什么问题,别忘了留言问我哦,我们一起解决!快去试试吧,开启你的本地大模型之旅!

最后来一起看看DeepSeekR1的其它数据指标:

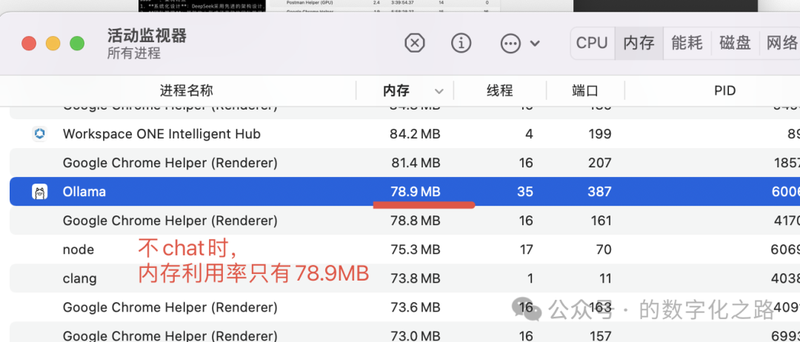

资源消耗情况

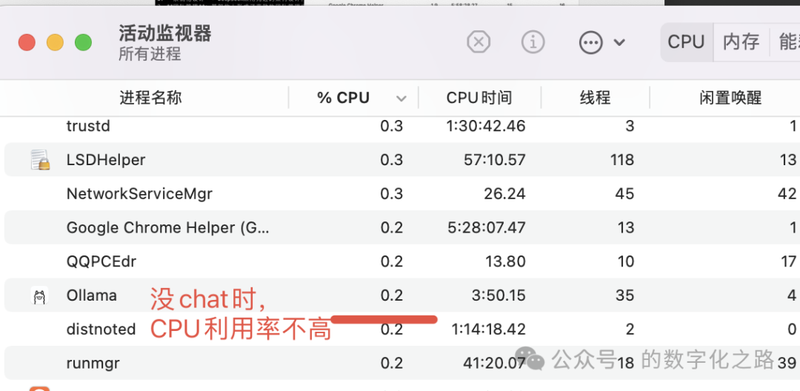

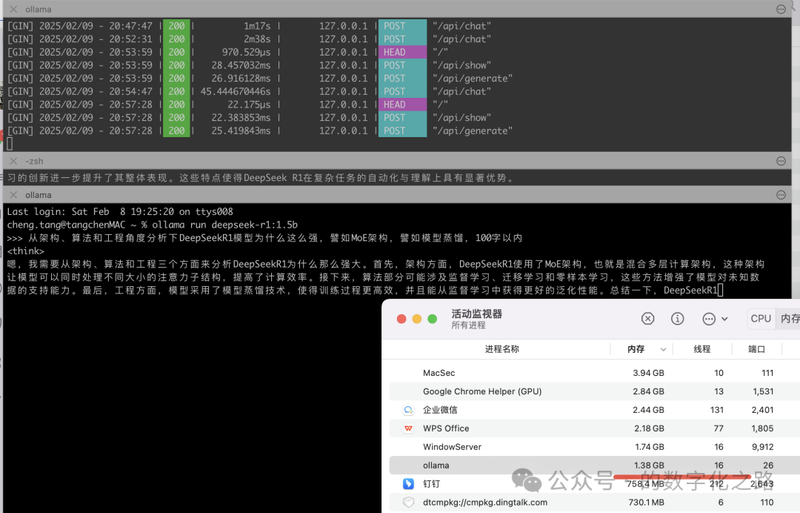

CPU使用情况:

与DeepSeekR1 chat时,6c的CPU基本打满。大模型属于CPU型的任务

内存使用情况:

与DeepSeekR1开启一个chat窗口时,需要1.38GB的内存。